Hello everyone,

The title of this post might give away my age and nerdiness levels, but for many reasons it felt apt, and I just couldn’t help myself.

This week’s themes deal with corporate responsibility, what it means to interact with machines, and how our mental models of chat can get in the way of our success.

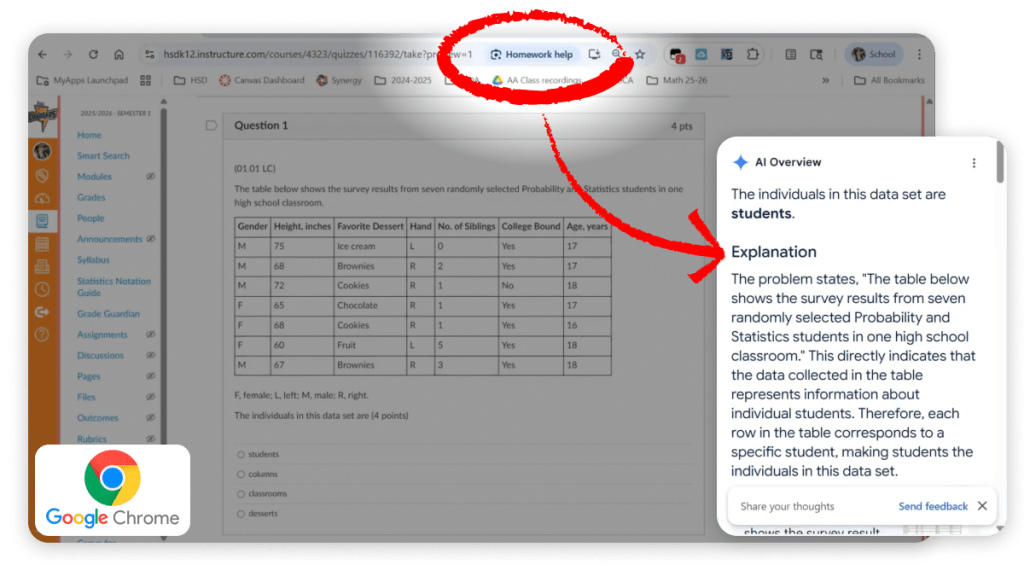

Google decides Chrome should do your homework

I don’t have many words for this one… It’s not even pretending to be a general AI that’s trying to “help” you interpret what’s on the screen. The feature is labelled “Homework help” outright, and it seems to detect when you’re in learning platforms used by educational institutions, such as Canvas, Moodle, and D2L. I would link to an official announcement, but I’m guessing this is in beta, since right now only online communities are discussing it. That makes it even more evident to me that this has nothing to do with student well-being. If you’re teaching this semester, you can look through this discussion to see how some instructors are trying to work with this new feature.

In May, OpenAI made ChatGPT Plus free for college students, just in time for their final exams. Now Google is testing this in the first week of September… What a coincidence that it’s the start of the school year!

And what a shame that they pulled it as soon as teachers complained. My fellow UX designers might call this “user testing.” I call it “trying to get away with it.”

OpenAI says LLM hallucinations are your fault

In a nutshell, this new research from OpenAI says “LLMs hallucinate because people don’t like it when machines say ‘I don’t know’ and if we let the machines admit a lack of knowledge, then they won’t hallucinate as much.”

I appreciate the sentiment, but there are many reasons this doesn’t make a whole lot of sense. The best way to understand why is to watch this phenomenal explainer video on how LLMs work. I’m not a computer scientist, so take what I say with a grain of salt, but there’s no reason to think that machines that work by predicting words can overcome hallucinations simply because we change the way people evaluate them…

What is noteworthy is how we’re replicating our bias against experts who say “I don’t know.” It’s a bias the media has helped fan for decades now. Intellectually, we know that true experts know when to say “I don’t know,” but the average person wants to feel taken care of and misinterprets “I don’t know” as a lack of expertise.

This gets a mention here because, in educational settings, this is a message we don’t repeat enough. Our assignments rarely let students express a lack of knowledge, and instructors generally like to create assignments with clearly defined boundaries.

So while the research is not the most useful for industry, I still commend them for attempting to understand what’s going on. Meanwhile, I think we should treat the results less as “computer science” and more as fodder for the analogy of AI as a mirror.

Branches in ChatGPT remind us it’s not human

What is relevant from a technology perspective is OpenAI’s release of “branching” in ChatGPT. It lets you split a chat at any point into separate chats, so you can pursue different ideas in parallel rather than in sequence within one conversation.

If you’re trying to teach people how to leverage the real benefits of AI, this is the feature you should show them. Why? Because it makes it abundantly clear that these aren’t human-like conversational partners, and using them as such is like using a rocket ship to travel 10 kilometres. Not only is it wasteful overkill, it’s also counterproductive.

Benj Edwards, in the article linked above, puts the value of this wonderfully:

There is no inherent authority in AI chatbot outputs, so conversational branching is an ideal way to explore that “multiple-perspective” potential. You are guiding the LLM’s outputs with your prompting. Non-linear conversational branching is just one more feature to remind you that an AI chatbot’s simulated perspective is mutable, changeable, and highly guided by your own inputs in addition to the training data that forged its underlying neural network. You are guiding the outputs every step of the way.

I’ll be recording a video about ways to use this in educational settings soon. Let me know if you have any specific questions or challenges you’d like me to address by replying to this email!

Cheers,

Charlie