Hello,

Four weeks ago, I committed to a tiny experiment for the month of September in which I would send three learnings each week.

Today is the last week. Since I started, I’ve also:

- Released my first 20-minute video on YouTube about the problems with ChatGPT’s Study Mode

- Created a Short that walks through a comparison activity teachers can use in their classes to help students understand their critical thinking abilities

- Passed 110,000 views on my TEDx Talk, which was released on September 4

A lot of these are part of a larger goal I’m pursuing: to better understand how we learn with technology and to create new experiences for a new generation of students. Though the road ahead seems quite unclear, sharing my learnings has, for the past four weeks at least, given me a rhythm for digesting this information.

Keep your eyes peeled for this new book on teaching with AI

Marc Watkins will be working on a new book about teaching with AI with two other authors. He’s one of my favourite writers on the subject, and this book is sure to cover some worthwhile fundamentals. They address the challenge of writing about such a fast-moving technology head-on in their announcement:

This guide, which will go to press in Summer 2026, will inevitably reference technologies or capabilities that have shifted by the time you are reading it.

And yet, how learning works won’t change. The key principles of teaching and learning are well-established. You are a capable teacher, and you are teaching in a new situation. We know the general outlines of what AI can do and what it might be capable of in the future. So, this new situation doesn’t have to be overwhelming. You can use the concepts of good teaching that you already know—or that you will learn through the guide—in order to respond effectively to this brave new world.

Even if you’re not interested in buying something like this, you can gain a lot by following each of the three authors to keep up!

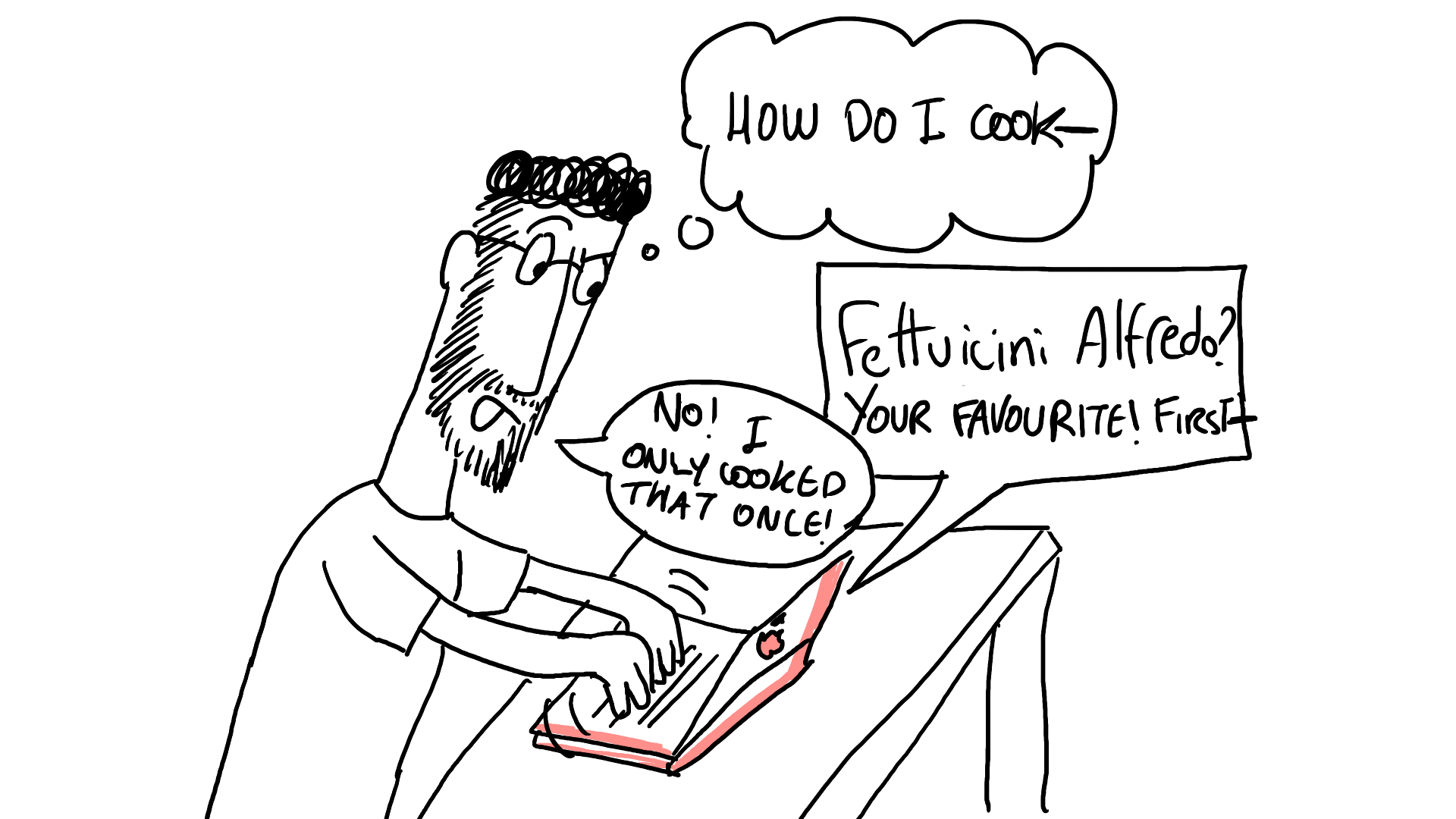

ChatGPT’s new memory might hurt learning, and reminds us of the beauty of forgetting

ChatGPT recently turned on a new memory feature that remembers what you’ve said to it across chats and forms a model of you to “better tailor its responses”. For some functions this could be useful (though the cost of giving everything to OpenAI hardly seems worth it), but I would argue that for learning it is not a good idea. As Simon Willison points out, chats that have nothing to do with the task at hand start bleeding into new ones and degrade the quality of the outputs. I’ve made a similar argument before about students who form bad learning habits: the AI picks up on those habits and uses them to perpetuate poor outcomes. This is absolutely not the future of education we should be asking for. What’s required is more intention and agency, and hyper-personalization through memory is not the right direction.

Conversations are collaborative, ChatGPT is supportive. These are not the same thing.

Last week I shared an article about how branching shows us LLMs are not meant to be human-like, and treating them as human has been a thorn in my side since the very beginning. The metaphors we use matter, and Eryk Salvaggio’s incredibly thoughtful piece on human conversation is here to remind us of exactly that. Here’s what resonated with me most:

LLMs have certainly transformed our relationship to language and images, but they have not yet revolutionized “intelligence.” On that, they have a long way to go. People keep saying we need to update our definitions of intelligence, and maybe that’s good. It would be more practical, though, to redefine our understanding of a conversation. What used to be a dance of mutual world-building, a means of engaging in imaginative play, is no longer exclusively that.

[…]

Rather than two worlds within minds struggling to describe what those minds contain, as it is in the best of human conversation, a chat with a large language model is a projection of our own thoughts into a machine that scans words exclusively to say something in response.

[…]

AI is a conversation-shaped tool, used to create some of the benefits of a conversation in the absence of another person. But with too much dependency, they risk making real reciprocity, sharing, and vulnerability even rarer. We ought to strive for the opposite: to create meaningful connections to others with our conversations.

In many ways, I suppose the definitions of everything are so in flux right now that it’s impossible to even agree on what each of us values. To me, conversations have always been about more than just being heard and getting some outputs. Perhaps to others this is not so important. So, when it comes to learning, how do teachers explain why they expect students to use AI in a certain way, when student and teacher may not be standing on the same foundations?

Perhaps that requires more conversations :).

And with that, my experiment for the month of September comes to an end. Though I originally planned to continue these shares less frequently, I have instead decided to take a long break into November… and perhaps beyond.

I need to recuperate. The last few months have been some of the most dissonant in recent memory. Emotions ranging from extreme highs to extreme lows. Hopelessness colliding with hopefulness in places that I never expected.

It’s been a real pleasure taking the time to sit and write out what I have learned. I believe it very much deepened my world, and I hope you were also able to take something away from it. If so, I invite you as always to reply to this email and let me know what you learned.

Til November!

Charlie